It is called the basketball exercise, or sometimes inattentional blindness, and it demonstrates a real problem in many kinds of automated testing.

What is this thing, you ask? It can be hard to explain, but I can show you in about a minute. Look, there’s a video:

Did you watch the video? Give it a try, don’t scroll down, then come back.

Now did you watch the video? Seriously? Ok.

Here’s the thing. Say I am testing an application by hand, running down feature by feature, perhaps following a checklist or set of directions. The more detailed the directions, the higher the chance that I focus solely on the directions and miss something else — something important, but not planned for, with no written directions.

This leads to my statement that at the end of every test instruction is a second instruction, a hidden expected result that “… and nothing else funny happened.”

It turns out that computers are pretty bad at evaluating that last condition.

The Automated Approach

For regression testing, there are two common approaches. The first is Record/Playback, where historically the tester captures a literal window or region of the screen, which is saved as an image. The next time the test runs, the tool grabs the window again and compares it to that image. Move the browser, change the screen resolution, add a button, have a date in the lower-right corner: whatever the change is, the software will report an error.
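To make that brittleness concrete, here is a minimal sketch of a strict pixel-for-pixel comparison. It is not how any particular tool works; the tiny in-memory "screens" and the names `make_screen` and `images_match` are made up for illustration.

```python
from typing import List, Tuple

Pixel = Tuple[int, int, int]
Image = List[List[Pixel]]


def make_screen(width: int, height: int, fill: Pixel = (255, 255, 255)) -> Image:
    """Build a blank 'screenshot' as rows of RGB pixels (illustrative only)."""
    return [[fill for _ in range(width)] for _ in range(height)]


def images_match(baseline: Image, current: Image) -> bool:
    """Strict comparison: any single differing pixel counts as a failure."""
    return baseline == current


baseline = make_screen(4, 3)

# A second capture of the unchanged screen.
same_run = make_screen(4, 3)

# Simulate a date in the lower-right corner changing a single pixel.
next_day = make_screen(4, 3)
next_day[2][3] = (0, 0, 0)

print(images_match(baseline, same_run))  # True
print(images_match(baseline, next_day))  # False
```

One changed pixel out of twelve is enough to fail the run, which is the false-error problem in miniature.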

The second approach is keyword-driven, where you tell the software exactly what to do: click, click, type, click, check for an exact string of text. With keyword-driven testing, you don’t get the false-error problem, but instead you get exactly the count of the basketballs.

With a keyword-driven test automation approach, missing the dancing bear isn’t a risk; it is guaranteed. That’s okay. We recognize that problem, count on our regression tools to count the basketballs, and check for the dancing bear in other ways.
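Here is a rough sketch of what "counting the basketballs" looks like in a keyword-driven runner. Everything in it is hypothetical: `FakePage` stands in for a real UI driver, and the keywords `type`, `click`, and `check_text` are invented for the example rather than taken from any real tool.

```python
from typing import Dict, List, Tuple

Step = Tuple[str, str, str]  # (keyword, target, value)


class FakePage:
    """Hypothetical stand-in for an application under test."""

    def __init__(self) -> None:
        self.fields: Dict[str, str] = {}
        self.text: Dict[str, str] = {"status": "", "banner": ""}

    def type_text(self, target: str, value: str) -> None:
        self.fields[target] = value

    def click(self, target: str) -> None:
        if target == "login":
            self.text["status"] = "Welcome, " + self.fields.get("username", "")
            # The dancing bear: something funny that no step checks for.
            self.text["banner"] = "ERROR: unexpected failure in sidebar"


def run_steps(page: FakePage, steps: List[Step]) -> List[str]:
    """Execute each keyword and collect failures for the scripted checks only."""
    failures: List[str] = []
    for keyword, target, value in steps:
        if keyword == "type":
            page.type_text(target, value)
        elif keyword == "click":
            page.click(target)
        elif keyword == "check_text":
            actual = page.text.get(target)
            if actual != value:
                failures.append(f"{target}: expected {value!r}, got {actual!r}")
        else:
            raise ValueError(f"unknown keyword: {keyword}")
    return failures


steps: List[Step] = [
    ("type", "username", "alice"),
    ("click", "login", ""),
    ("check_text", "status", "Welcome, alice"),
]

page = FakePage()
failures = run_steps(page, steps)
print(failures)             # []
print(page.text["banner"])  # ERROR: unexpected failure in sidebar
```

The run reports no failures because the only thing it was told to look at was the status text. The error banner is right there on the page, and the test never looks at it.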

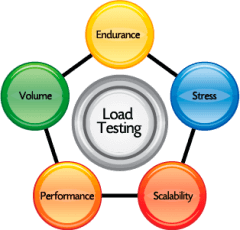

But that’s traditional regression testing. Let’s talk about Performance and Load.

Performance Problems

Back in the bad old days (okay, the 1990s), when computers were slow, memory was expensive, and bandwidth was a real problem, performance testing was hard. Running more than one or two simultaneous browsers was a real problem; they took up too much CPU. Simulating a performance test through a browser was extremely hard. For that matter, the ‘easy to program with’ network stacks had too much overhead. That’s no problem if you have four tabs open in your browser, but when you are trying to simulate a thousand simultaneous connections, you really want the lowest-memory, smallest-CPU footprint: something with no GUI at all. So the approach was to use the application in a browser, record the traffic, then run it a thousand times through a tool with no GUI.

At least, that was the competitive strategy of many tool vendors in the 1990s: make a network stack so small, and so thin, that you could run your performance tests all on one machine. (The alternative is to have one ‘master’ and many ‘slave’ machines. In the 1990s, you needed a lab.)

So the fight in the 1990s was over who could make the thinnest stack, so you could scale one machine to the highest number of simulated users.

What does this have to do with the basketball?

Well, if you are just simulating traffic and checking error codes, not data, there is a good chance that by repeating recorded traffic, you are repeating invalid traffic … and don’t know it.

The load testing tool might be counting basketballs and missing the moonwalking bear.
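A small sketch of how that can happen, with no real network involved. The scenario is an assumption for illustration: a server that answers 200 OK with a "session expired" page when it is handed a stale recorded token. The names `Response`, `replay_recorded_request`, and the token and order values are all invented.

```python
from dataclasses import dataclass


@dataclass
class Response:
    status: int
    body: str


def replay_recorded_request(session_token: str) -> Response:
    """Hypothetical server: returns 200 even when the recorded session is stale."""
    if session_token != "fresh-token":
        return Response(200, "<html>Your session has expired. Please log in.</html>")
    return Response(200, "<html>Order 1001 confirmed</html>")


def status_only_check(response: Response) -> bool:
    """What a thin replay tool might verify: the error code and nothing else."""
    return response.status == 200


def content_check(response: Response, expected: str) -> bool:
    """Also verify that the body contains the data a real user would see."""
    return response.status == 200 and expected in response.body


# Replay the same recorded (and by now stale) request a thousand times.
responses = [replay_recorded_request("stale-recorded-token") for _ in range(1000)]

passed_status = sum(status_only_check(r) for r in responses)
passed_content = sum(content_check(r, "confirmed") for r in responses)

print(passed_status, passed_content)  # 1000 0
```

By error code alone, all thousand requests succeeded. Checked against the data, none of them placed an order, so the load being measured was a thousand copies of the login page.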

Yesterday’s Fix

The classic fix for this was to check the server back-end, to see if real transactions were coming through. If real transactions are coming through, and you want just a little bit more confidence, you could have a real human being try to use the application, stopping periodically to mark down how they feel about speed on the Wong Pain Scale:

The advantage of the Wong Pain Scale is that it is subjective; it is based on how the customers actually feel, not the number of seconds it takes messages to get from system A to system B. Using a real front-end also measures the whole transaction, from request through the server, back to the client and including front-end rendering. ‘Headless’ transaction playback might have kept the number of machines low, but it would never tell you that the JavaScript takes a long time to process in Firefox.

Over time, performance expectations change; what was wonderful performance in the age of the dial-up modem may be considered horrible today. Using a pain scale allows the testers (ideally, potential real users of the application) to make a subjective assessment of the whole delivery, end-to-end.

It is also kind of expensive, and sometimes awkward, to ask the customers to drop by the test lab to run scripts for a couple of hours and take notes. They are likely to say “hey man, you’re the technical staff. Just build it fast enough.”

This option still doesn’t tell us if the initial load is valid. Sure, we can search through server logs and try to figure it out, but now we’re hunting for the dancing bear. (At press time, SmartBear Software had just launched an infographic claiming that “lack of visibility into on-screen transactions” was a “major concern” for 40% of DevOps Professionals. In other words: They have pretty graphs to model response time, but don’t have an understanding or deep feel for what the users are actually experiencing.)

Another Choice

Fifteen years after the load testing wars, computers are fast. Thanks to server virtualization, you can run a half-dozen different instances of a browser on one machine. Thanks to tools like terminal server, you can run a hundred. You also don’t need to buy a hundred servers for your test lab; you can go to the cloud and rent a hundred servers for an hour each for around a hundred dollars US.

Instead of worrying about whether the playback is valid, you watch the test run, perhaps jumping between virtual machines along the way. Instead of having users run scripts, they watch the execution in a real, full-stack browser.

This choice will cost more money, but it limits the dancing bear problem.

Right Now

I have listed three different approaches to load testing web applications. It is not a complete list; if your application needs to scale to the customer service department, you could just buy the customer service department pizza lunch for one day.

Now I’ve listed four.

Still, it’s time to wrap this post up, and to hear from you. What am I missing? How do you decide between approaches? What additional problems do you see each approach bringing?

Talk to me, Goose.

Who knows, we may even get somewhere. Wouldn’t that be nice?