Today I’ll cover the story of project number two, “just a little code” to integrate two systems. But first, let’s talk about my motivations.

Matthew Heusser

The Killer App Finale

Last week I posted The Killer App, the story of a real software project with a cliff-hanger, pick-a-path ending. It’s time to close the loop. “The Killer App” was a project that was not just late, but hopeless; a claims processing system that could not process a single claim end-to-end. When I suggested that we …

More On The Test Competition

Over the past two weeks I have spent a great deal of time doing research, gathering resources, and judging interest in an on-line test competition in software testing. My conclusion is kind of like Nike.

The Killer App

We called it the Killer App, and, for the insurance industry, it was magic.

A Tale of Two Performance Projects

Imagine for a moment two companies have an identical problem – the website is slow. Perhaps this is a huge problem; the company bet its future on a new product that customers sign up for on the web, and performance is bad enough that people are abandoning the site and going to the competition. In a few more months, the competition will ‘own’ the market, and the customers still worth fighting over will be a small fraction of the total – more like 9% than the 90% of market share hoped for in the initial offering.

System Shenanigans

Last week I put out the Black Swan In The Enterprise, which argued that most uptime planning comes from the ability to predict the future, and sometimes the future is wildly different than you would have predicted. Things fall apart; the centre does not hold, and they fall apart very differently than what the team planned for up front. If that’s the case, what’s the company to do, and how can Operating Systems promise those 99.995% uptimes and take themselves seriously?

An Online Test Competition

When the staff at NRGGlobal asked me to start blogging for them, they asked what we had in common, and what they could do for the test community.

Yugo Testing

Recently, I was invited to observe a colleague’s monthly stress test exercise. There is a large room filled with managers and technicians, experts in various aspects of the system, general network specialists, application specialists, customer support representatives and a collection of people with backgrounds in various aspects of testing.

The Black Swan In The Enterprise

Amazon’s service level agreement for its cloud computing service is 99.95% uptime for a service region (that’s a data center) during a one-year period.
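
To put that percentage in perspective, here is a quick back-of-the-envelope sketch of the downtime it allows. The function name `allowed_downtime_hours` and the non-leap-year assumption are mine, for illustration only; they are not part of any SLA wording.

```python
# Assumption: a 365-day (non-leap) year, measured in hours.
HOURS_PER_YEAR = 365 * 24


def allowed_downtime_hours(uptime_percent: float,
                           period_hours: float = HOURS_PER_YEAR) -> float:
    """Return the downtime budget, in hours, implied by an uptime percentage."""
    return period_hours * (1 - uptime_percent / 100)


if __name__ == "__main__":
    # Downtime budget for a 99.95% uptime promise over one year.
    print(round(allowed_downtime_hours(99.95), 2))
```

Under those assumptions, 99.95% works out to a little under four and a half hours of permitted downtime per year.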

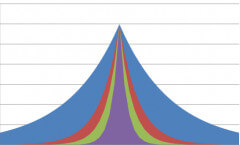

Deviant Deviation

A few years ago, I worked on a performance project. You probably know the type: Customers were upset, executives were upset, technical staff were asking for specific direction (and not getting much) … nobody was happy.

The code was already in production, and there was no obvious ‘roll it back to the previous version that is just fine’ trick for us to pull. It was pretty bad.

There are worse projects. When the technical staff starts pointing fingers at each other, then you know nothing is going to get done. At least we had hope.

Then came the fateful day. The CEO was on the conference call, and he said, very sincerely, “We’ve got problems with the website … we really need some more testing.”

At this, the director of product quality perked up. “I have to politely disagree. Testing will tell us if the product is slow or not. We already know the answer to that question: It is slow. In addition to knowing it is slow, we know what parts are slow: We have gigabytes and gigabytes of performance data to look at. It occurs to me that we need something else, other than performance testing; we need performance fixing.”

He was right. We did get to fixing, but the next step was to analyze that performance data, to find opportunities for improvement.

It turns out that analyzing can be surprisingly challenging. More about that today.